How to: Compete with Amazon S3 without Buying Hardware

You build a storage router.

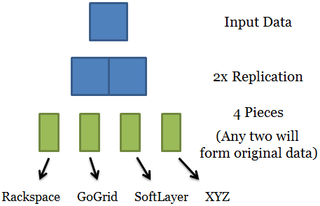

Given a piece of customer data you want to store, replicate it 2x and store half a copy's worth on each of four different raw storage providers. For example, Rackspace gets half a copy, as do SoftLayer, GoGrid, and Storm on Demand. Keep in mind that you're buying raw storage from each of these guys, not redundant storage. Each provider is just selling you hard drives attached to the Internet.

Your customer's data will be available as long as two of the four raw storage providers are up.

(To be clear, you would use Reed-Solomon erasure codes, so you could dial in any desired replication factor and any desired number of storage endpoints. [1] The blocks in the diagram above are intended to represent the sizes of the data pieces, not how they are encoded.)
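For illustration only, here is a toy systematic Reed-Solomon-style code. It works over the prime field GF(257) because the arithmetic is then ordinary modular arithmetic; real deployments usually work in GF(2^8) so every coded symbol fits in a byte (here a parity symbol can equal 256, so shares are lists of ints). The first k shares hold the data itself, the remaining n - k hold parity, and Lagrange interpolation through any k shares recovers the rest. The function names and the choice k = 2, n = 4 (matching the 2x, four-provider example) are assumptions of this sketch.

```python
P = 257  # prime field GF(257): every byte value 0..255 is a field element


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate, at x, the unique polynomial of degree < len(points)
    passing through the given (xi, yi) points, working mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division mod P via Fermat's little theorem: den^(P-2) is den^-1.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> list[list[int]]:
    """Split data into n shares (one per provider); any k of them suffice."""
    if not 0 < k <= n < P:
        raise ValueError("need 0 < k <= n < 257")
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    shares: list[list[int]] = [[] for _ in range(n)]
    for off in range(0, len(padded), k):
        # Treat k data bytes as the values of a polynomial at x = 1..k.
        stripe = [(x + 1, padded[off + x]) for x in range(k)]
        for s in range(n):
            # Shares 0..k-1 reproduce the data bytes; the rest are parity.
            shares[s].append(_interpolate_at(stripe, s + 1))
    return shares


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k shares, keyed by share index."""
    ids = sorted(available)[:k]
    if len(ids) < k:
        raise ValueError("need at least k shares to decode")
    out = bytearray()
    for pos in range(len(available[ids[0]])):
        points = [(s + 1, available[s][pos]) for s in ids]
        for x in range(1, k + 1):
            out.append(_interpolate_at(points, x))
    return bytes(out[:length])
```

With `shares = encode(data, 2, 4)`, each share is half the size of `data`, so the four shares together store two copies' worth, and `decode({1: shares[1], 3: shares[3]}, 2, len(data))` returns the original bytes even with providers 0 and 2 down.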

This design could provide more independent failure modes than Amazon S3.
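To put a rough number on it: assume, purely for illustration, that each of the four providers is up with probability p = 0.99 and that their failures are independent. The data is unreachable only when fewer than two providers are up:

```latex
\Pr[\text{unavailable}] = q^4 + \binom{4}{1} p\, q^3, \qquad q = 1 - p = 0.01
\\
= 10^{-8} + 4\,(0.99)\,(10^{-6}) \approx 3.97 \times 10^{-6}
```

That is roughly 99.9996% availability from providers that are each only 99% available. Correlated failures (a shared backbone or datacenter) break the independence assumption, which is why it matters which providers you combine.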

More importantly, this design would create a marketplace for people selling raw storage. Whenever someone comes in with a better price, you seamlessly move customer data from the most expensive of your providers to this newer, cheaper one. Of course you would maintain appropriate quality controls [2], monitor uptimes, backbone redundancy, etc. You're a storage router.
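The routing decision itself can be small. A minimal sketch, assuming a hypothetical `Provider` record with a per-GB price and a measured uptime (stand-ins for whatever quality metrics you actually track): filter out providers below an uptime floor, then pair your most expensive current providers with the cheapest qualifying newcomers, and move data only where that lowers the price.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    price_per_gb_month: float  # hypothetical unit price
    uptime: float  # measured availability, e.g. 0.999


def plan_migration(
    current: list[str], providers: list[Provider], min_uptime: float
) -> list[tuple[str, str]]:
    """Return (from_provider, to_provider) moves that cut storage cost."""
    by_name = {p.name: p for p in providers}
    # Cheapest qualifying providers that don't already hold a share.
    newcomers = sorted(
        (p for p in providers if p.uptime >= min_uptime and p.name not in current),
        key=lambda p: p.price_per_gb_month,
    )
    # Current holders, most expensive first.
    holders = sorted(current, key=lambda n: by_name[n].price_per_gb_month, reverse=True)
    moves = []
    for holder, newcomer in zip(holders, newcomers):
        if newcomer.price_per_gb_month >= by_name[holder].price_per_gb_month:
            break  # later pairs only get worse: holders cheaper, newcomers pricier
        moves.append((holder, newcomer.name))
    return moves
```

Each newcomer takes over at most one share, so every share stays on a distinct provider and the independent failure modes are preserved.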

Amazon S3 enjoys high margins today

Amazon's storage offering has built up high margins. Up until two months ago, Amazon S3 hadn't dropped prices since its launch in 2006, despite the fact that hard drive cost per gigabyte falls about 50% per year. [3]

In 2006 a 320 GB hard drive cost $120. Today (Thailand floods aside) that much money will snag you a 3 TB drive.
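In per-gigabyte terms (counting 3 TB as 3,000 GB, the way drive makers do), the same money buys roughly nine times as much capacity:

```latex
\frac{\$120}{320\ \text{GB}} = \$0.375/\text{GB}
\qquad
\frac{\$120}{3000\ \text{GB}} = \$0.04/\text{GB}
\qquad
\frac{0.375}{0.04} \approx 9.4
```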

Those are real margins that Amazon has built up, and even after their recent price drop they're still leveraging a lot of pricing power. There's surprisingly little competition.

The Android strategy for the cloud storage market

Just as cell phone OEMs like Samsung and HTC didn't know how to compete with the iPhone, the guys left in the dust by Amazon (Rackspace, SoftLayer, and even more so the hundreds of small hosting companies out there) don't know how to compete.

What I'm proposing is the Android strategy, because it gives them a way to compete on an axis that the new winner doesn't want to compete on: price.

And in this case, since you're running the service that routes customer data into and out of the right storage providers, you control the relationship with the customer, so you get the pricing power.

The challenges

There are a couple of reasons why I'm not pursuing this idea (in increasing order of importance):

- Selling storage requires a brand that people trust. That could be built, but it is a very big friction point at the most inopportune moment: the beginning.

- It's a low margin business, and you'd probably need a sales team eventually. Both of those are unfortunate.

- Amazon Web Services has revenue from a number of complementary services (most importantly EC2) that they could use to make storage a loss leader, sucking all of the oxygen out of the room. Amazon did this to diapers.com in 2010. They know how to run and compete in low margin businesses. Amazon would also compete by giving deep discounts to large customers who suggest they might use this service instead. Amazon's dominance and ecosystem are the most important weakness of this idea.

Who then?

The world would be a better place with a service like this, possibly extending into a marketplace for compute as well. Someone like Rackspace or SoftLayer could do it. Google could do it. A startup could do it. Or maybe OpenStack will adopt a model like this.

If this happens, though, I think it will be done by a startup.

What Rackspace, SoftLayer, and others should do now

The big hosting guys should launch a low priced "hard drive attached to the Internet" product. [4] Such a move would pave the way for one or more startups to build the storage router, bringing a new type of innovation to cloud computing.

Notes

[1] The replication doesn't need to be uniform, either. For example, if read performance is important, maybe one storage provider has a full copy of the original data, and another 1.5 copies are spread across three other providers for redundancy. The ideal setup depends on the customer's desired tradeoffs. This advanced level of control might also be a competitive advantage for some storage customers.
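One way to compare layouts is to count each provider's holding in fragments of 1/k of the original data (with k = 2, a full copy is two fragments and half a copy is one) and check the worst case. A minimal sketch, assuming an erasure code where any k fragments recover the data; the provider names are placeholders.

```python
from itertools import combinations


def survives_all(layout: dict[str, int], k: int, failures: int) -> bool:
    """True if at least k fragments remain after ANY `failures` providers go down."""
    for down in combinations(layout, failures):
        remaining = sum(count for name, count in layout.items() if name not in down)
        if remaining < k:
            return False
    return True


def max_tolerated_failures(layout: dict[str, int], k: int) -> int:
    """Largest number of simultaneous provider outages the layout always survives."""
    f = 0
    while f < len(layout) and survives_all(layout, k, f + 1):
        f += 1
    return f


def storage_overhead(layout: dict[str, int], k: int) -> float:
    """Total bytes stored, as a multiple of the original data size."""
    return sum(layout.values()) / k


uniform = {"A": 1, "B": 1, "C": 1, "D": 1}  # half a copy each
read_heavy = {"Full": 2, "B": 1, "C": 1, "D": 1}  # one full copy + 1.5 copies spread
```

Both layouts survive any two provider outages; the read-heavy one costs 2.5x instead of 2x, but with a systematic code its full-copy provider can serve reads on its own, without decoding.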

Update, Sept 2012: You could also consider using the new Amazon Glacier for end-of-line backup, in combination with hot copies hosted by others.

[2] There are many types of quality controls to build. For example, use a provable data possession (PDP) protocol to verify that each storage provider still has its data without having to retrieve the whole thing.
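As a simpler stand-in for a real PDP scheme, here is a spot-check sketch using only Python's standard library (`hmac`, `hashlib`, `secrets`). Before upload, the router tags each fixed-size block with an HMAC over the file ID, block index, and contents; the provider stores blocks and tags, and the router keeps only the key. An audit asks for a few random blocks and checks their tags. The 4 KiB block size, the `InMemoryProvider` class, and the function names are assumptions. Published PDP schemes go further, combining the sampled blocks into a compact proof so the provider never ships the blocks themselves.

```python
import hashlib
import hmac
import secrets

BLOCK = 4096  # hypothetical block size in bytes


def _tag(key: bytes, file_id: bytes, index: int, block: bytes) -> bytes:
    # Binding the index and file ID stops a provider from answering
    # with a different block or a block from another file.
    msg = file_id + index.to_bytes(8, "big") + block
    return hmac.new(key, msg, hashlib.sha256).digest()


def tag_blocks(key: bytes, data: bytes, file_id: bytes) -> tuple[list[bytes], list[bytes]]:
    """Split data into blocks and compute one tag per block (done by the router)."""
    blocks = [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]
    return blocks, [_tag(key, file_id, i, b) for i, b in enumerate(blocks)]


class InMemoryProvider:
    """Hypothetical stand-in for a remote raw-storage provider."""

    def __init__(self, blocks: list[bytes], tags: list[bytes]):
        self.blocks, self.tags = blocks, tags

    def respond(self, indices: list[int]) -> list[tuple[bytes, bytes]]:
        return [(self.blocks[i], self.tags[i]) for i in indices]


def challenge(n_blocks: int, samples: int) -> list[int]:
    """Pick distinct random block indices to audit."""
    rng = secrets.SystemRandom()
    return sorted(rng.sample(range(n_blocks), min(samples, n_blocks)))


def verify(key: bytes, file_id: bytes, indices: list[int],
           response: list[tuple[bytes, bytes]]) -> bool:
    """Check every returned block against its tag in constant time."""
    if len(response) != len(indices):
        return False
    return all(
        hmac.compare_digest(_tag(key, file_id, i, block), tag)
        for i, (block, tag) in zip(indices, response)
    )
```

Sampling is what keeps audits cheap: if a fraction f of the blocks is lost, a challenge of c random blocks misses every lost block with probability roughly (1 - f)^c, so with f = 1%, about 460 samples catch the loss about 99% of the time.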

[3] This is strictly true for their first tier of storage customers. Over time they've published volume pricing for higher and higher volumes, though each tier's pricing never dropped. Amazon S3 pricing history: 2006, 2007, 2008, 2009, 2010.

[4] Such a product should be designed for the storage router use case, so it should include all of the APIs the storage router will need, including provable data possession.
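What that API looks like is open. One hypothetical shape, sketched with `typing.Protocol`, offers basic put/get/delete plus a `prove` call that returns SHA-256 over a router-chosen nonce followed by the stored bytes. The router precomputes answers for a batch of nonces before upload and spends one per audit. This precomputed-challenge scheme is a simpler stand-in for PDP: each audit makes the provider read the whole object, and the number of audits is limited to the nonces prepared in advance. All method and function names here are made up.

```python
import hashlib
import hmac
from typing import Protocol


class RawStorage(Protocol):
    """Hypothetical API for a 'hard drive attached to the Internet' product."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...
    def delete(self, key: str) -> None: ...
    def used_bytes(self) -> int: ...

    def prove(self, key: str, nonce: bytes) -> bytes:
        """Possession proof: here, SHA-256(nonce || stored bytes)."""
        ...


class InMemoryStorage:
    """Toy provider that satisfies RawStorage structurally."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = bytes(data)

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def delete(self, key: str) -> None:
        self._objects.pop(key, None)

    def used_bytes(self) -> int:
        return sum(len(v) for v in self._objects.values())

    def prove(self, key: str, nonce: bytes) -> bytes:
        return hashlib.sha256(nonce + self._objects[key]).digest()


def upload_with_challenges(store: RawStorage, key: str, data: bytes,
                           nonces: list[bytes]) -> dict[bytes, bytes]:
    """Upload data; return precomputed answers the router keeps for audits."""
    answers = {n: hashlib.sha256(n + data).digest() for n in nonces}
    store.put(key, data)
    return answers


def audit(store: RawStorage, key: str, answers: dict[bytes, bytes]) -> bool:
    """Spend one unused nonce; raises KeyError once all nonces are used."""
    nonce, expected = answers.popitem()
    return hmac.compare_digest(store.prove(key, nonce), expected)
```

Because the router code depends only on `RawStorage`, any provider that implements those calls can be swapped in behind it.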